In today’s competitive world, the decisive factor for an application is the “end-user experience”. An application may be built by a competent team, incorporate interesting features, and have a good UI, but all of that is of little use if it cannot hold up when many users access it at the same time. This is precisely why performance testing should not be taken for granted. Performance testing adds a great deal of value to the overall Quality Assurance process of any organization. However, if it is not planned and executed properly, it can also lead to issues after the software is delivered. When defining a test strategy, development and QA teams should give performance testing the same importance as any other type of testing. Certain baselines have to be established before devising a performance testing plan. This article explores them in detail.

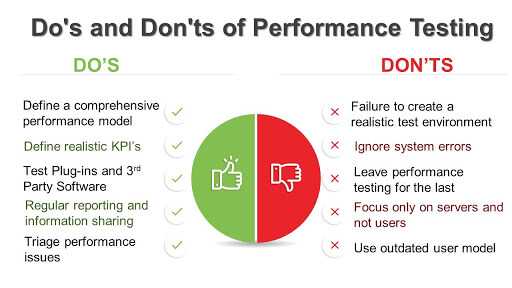

Define a comprehensive performance testing model

Identifying and establishing performance goals in line with business requirements holds the key to defining a comprehensive testing model. These goals could include the maximum response time during peak periods, the behavior of the system during concurrent user sessions, and server utilization statistics. They help in understanding and planning the capacity of the system, and they give the relevant stakeholders a sound basis for business decisions based on the test results.

Define realistic performance KPIs

Every system has certain Key Performance Indicators (KPIs), or metrics, that are evaluated against the baseline during performance testing. Based on the type of application being tested, the technical and business stakeholders select which metrics need to be checked and prioritize them accordingly. Be realistic and practical while setting performance KPIs. These benchmarks act as the point of reference during testing; if they are set incorrectly, the whole exercise is rendered futile.
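To make the idea of checking results against KPIs concrete, here is a minimal Python sketch. The KPI names and limits (a 95th-percentile response time of 800 ms and an error rate of 1%) are hypothetical placeholders, not recommendations; real values should come from the stakeholders. The sketch computes a nearest-rank percentile from raw response times and marks each KPI as passed or failed.

```python
import math

# Hypothetical KPI limits; real values come from the business and
# technical stakeholders. Lower is better for both metrics.
KPI_THRESHOLDS = {
    "p95_response_ms": 800.0,  # 95th-percentile response time
    "error_rate": 0.01,        # at most 1% failed requests
}


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def evaluate_kpis(response_times_ms, failed_requests, total_requests,
                  thresholds=KPI_THRESHOLDS):
    """Map each KPI name to a (measured value, passed) pair."""
    measured = {
        "p95_response_ms": percentile(response_times_ms, 95),
        "error_rate": failed_requests / total_requests,
    }
    return {name: (value, value <= thresholds[name])
            for name, value in measured.items()}


if __name__ == "__main__":
    samples = [120, 180, 250, 300, 420, 510, 640, 700, 950, 1200]
    for kpi, (value, ok) in evaluate_kpis(samples, 2, 1000).items():
        print(f"{kpi}: {value} -> {'PASS' if ok else 'FAIL'}")
```

With the sample data, the p95 response time is 1200 ms, so that KPI fails, while the error-rate KPI (0.2%) passes.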

Test third-party plugins and software

A system is only as strong as its weakest link. An application is an ecosystem of various modules, some built in-house and some sourced elsewhere. The performance of the whole system will suffer if a third-party plugin cannot meet the desired benchmarks. Hence, it is imperative to test such components both individually and as part of the whole system.

Regular reporting and information sharing

Regular reporting and sharing of information across the board are extremely important. It ensures that all the stakeholders, business and technical, are on the same page. Any anomaly should be immediately reported and analyzed at all levels to understand the root cause of the reported issue. This not only expedites the whole feedback loop but also helps in improving the overall quality of the product.

Triage performance issues

In a large project, especially one that spans multiple sprints, defect triage is a boon because it helps teams focus on the “right” defects. By prioritizing defects on the basis of the predefined performance KPIs, it gives testers and developers a process for fixing as many defects as possible, starting with the most critical ones.
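A simple way to picture KPI-based triage is to score each performance defect by how far it exceeds its KPI limit, weighted by the business priority of that KPI. The Python sketch below assumes hypothetical fields (`kpi_weight` on a 1 to 3 scale, and limits where lower is better); it is one possible scoring scheme, not a standard triage formula.

```python
from dataclasses import dataclass


@dataclass
class PerfDefect:
    defect_id: str
    kpi: str           # KPI the defect violates
    measured: float    # value observed during the test run
    threshold: float   # agreed KPI limit (lower is better)
    kpi_weight: int    # hypothetical business priority, 1 (low) to 3 (high)

    @property
    def breach_ratio(self) -> float:
        """How far the measurement exceeds its limit, relative to the limit."""
        return max(0.0, (self.measured - self.threshold) / self.threshold)

    @property
    def score(self) -> float:
        return self.kpi_weight * self.breach_ratio


def triage(defects):
    """Return defects ordered from most to least severe KPI breach."""
    return sorted(defects, key=lambda d: d.score, reverse=True)
```

For example, an error rate of 5% against a 1% limit (weight 2) would outrank a p95 response time of 900 ms against an 800 ms limit (weight 3), because its relative breach is far larger.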

Failure to create a realistic test environment

Software can perform very well in the test environment and still fall short in the real usage environment. This is often a consequence of failing to simulate a realistic test environment. For example, during the holiday season, a shopping website may have to handle a large number of simultaneous transactions from many users. If network bandwidth and CPU capacity are not taken into consideration during testing, the site may slow down significantly under that load. Hence, it is vital to have a test environment that closely emulates the environment in which the software will eventually run, with all possible load scenarios taken into consideration.

Ignoring system errors

System errors are indicators of unpredictable underlying issues. For instance, the response time of the software may be perfect under load, yet a stack overflow error occurs and causes a failure. Such errors may not be reproducible, but that does not mean they should go uninvestigated. Ignoring them because they could not be reproduced across multiple test runs leaves a chink in the armor.
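One way to keep such errors from slipping through is to make the verdict of a load run depend on errors as well as on response times. In this Python sketch, the result format (a response time in milliseconds paired with an error name or `None`) is an assumption. Any recorded error fails the run, even when latency is within its limit, so it has to be investigated rather than dismissed as a one-off.

```python
import math
from collections import Counter


def run_verdict(results, p95_limit_ms):
    """Judge a load run from (response_ms, error_name_or_None) tuples.

    Returns (passed, error_counts). Any recorded error fails the run,
    even if the 95th-percentile response time is within its limit.
    """
    if not results:
        raise ValueError("no results recorded")
    latencies = sorted(ms for ms, _ in results)
    p95 = latencies[math.ceil(95 * len(latencies) / 100) - 1]
    errors = Counter(err for _, err in results if err is not None)
    passed = p95 <= p95_limit_ms and not errors
    return passed, errors
```

For example, a run of 100 requests with a p95 of 200 ms against a 500 ms limit still fails if a single request ended in a `StackOverflowError`.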

Leaving performance testing for last

Giving performance testing low priority while focusing on getting the functionality right is a big mistake. With an iterative approach to development, issues become increasingly difficult and costly to fix the later they are found. It is always advisable to build performance checks into unit tests. This allows issues to be identified and fixed within the same cycle, saving a significant amount of time and cost.
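As a minimal illustration of a performance check living inside a unit test, the Python `unittest` case below asserts both the functional result and a time budget. `build_order_summary` and the 0.5-second budget are hypothetical. Wall-clock timings vary between machines and runs, so budgets like this should be generous and tied to the agreed KPIs rather than treated as precise measurements.

```python
import time
import unittest


def build_order_summary(items):
    """Hypothetical function under test: totals (price, quantity) pairs."""
    return sum(price * qty for price, qty in items)


class OrderSummaryPerformanceTest(unittest.TestCase):
    # Hypothetical budget; keep it generous, since timings are noisy.
    BUDGET_SECONDS = 0.5

    def test_summary_meets_time_budget(self):
        items = [(9.99, 3)] * 100_000
        start = time.perf_counter()
        total = build_order_summary(items)
        elapsed = time.perf_counter() - start
        # The functional check still applies alongside the timing check.
        self.assertGreater(total, 0)
        self.assertLess(elapsed, self.BUDGET_SECONDS,
                        f"took {elapsed:.3f}s, budget {self.BUDGET_SECONDS}s")
```

Because it is an ordinary test case, it runs with the rest of the unit test suite, for example via `python -m unittest`.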

Focusing only on servers and not users

Do not overlook user behavior, because it is equally important. Server tests may be successful, yet once real users access the system, they may use it in many different ways. The user's circumstances can also be a deciding factor in the performance they experience. For example, a user may be located in a region served by comparatively slow servers, or may be accessing the application via a handheld device. The results of analyzing usage patterns and the target user group can be incorporated as metrics while defining the KPIs.
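Usage patterns can feed directly into a load test by splitting virtual users across user profiles in proportion to real traffic. The Python sketch below does this with largest-remainder rounding so the counts always add up to the requested total. The profile names and traffic shares are hypothetical, and how each profile's device or network conditions are simulated depends on the load testing tool, which is not shown here.

```python
import math


def allocate_virtual_users(mix, total_users):
    """Split total_users across user profiles in proportion to traffic share.

    mix maps a profile name to its share of real traffic (shares sum to 1).
    Largest-remainder rounding makes the counts add up to total_users.
    """
    if not math.isclose(sum(mix.values()), 1.0):
        raise ValueError("profile shares must sum to 1")
    raw = {name: share * total_users for name, share in mix.items()}
    counts = {name: math.floor(value) for name, value in raw.items()}
    leftover = total_users - sum(counts.values())
    # Give the remaining users to the profiles with the largest fractional parts.
    by_remainder = sorted(raw, key=lambda n: raw[n] - counts[n], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts


# Hypothetical mix derived from analytics on real usage.
mix = {"desktop_broadband": 0.50, "mobile_4g": 0.35, "mobile_3g": 0.15}
print(allocate_virtual_users(mix, 1000))
```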

Using an outdated user load model

Not all days are the same, and usage patterns keep changing. Keeping track of the actual user load is vital to ensure that the application's scalability model stays up to date.
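As a rough illustration of refreshing the load model, the Python sketch below estimates peak concurrency from recent session records using Little's law (concurrent users = arrival rate × average session duration), applied separately to each clock hour. The session record format is an assumption, and the estimate treats each hour as steady state, so it is a starting point for the model rather than a precise capacity figure.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def peak_hour_concurrency(sessions):
    """Estimate the busiest hour's concurrent users from recent sessions.

    sessions: list of (start: datetime, duration: timedelta) records.
    Applies Little's law per clock hour: L = lambda * W, with lambda the
    sessions started per second in that hour and W their mean duration in
    seconds. Returns (busiest hour, estimated concurrent users).
    """
    durations_by_hour = defaultdict(list)
    for start, duration in sessions:
        hour = start.replace(minute=0, second=0, microsecond=0)
        durations_by_hour[hour].append(duration.total_seconds())
    estimates = {}
    for hour, durations in durations_by_hour.items():
        arrival_rate = len(durations) / 3600              # sessions per second
        mean_duration = sum(durations) / len(durations)  # seconds
        estimates[hour] = arrival_rate * mean_duration
    busiest = max(estimates, key=estimates.get)
    return busiest, estimates[busiest]
```

For example, 3,600 sessions starting within one hour, each lasting 10 minutes, give an arrival rate of one session per second and an estimate of 600 concurrent users for that hour.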

A well-rounded performance testing strategy ensures that a robust end product is delivered to customers, provided that best practices are followed while defining test strategies and common mistakes are avoided. Choosing the right testing tool is key to achieving this.

Webomates’ AI-based tool helps expedite performance testing by reusing existing functional test cases and converting them into performance scripts. Performance runs can be validated during interim periods or nightly builds, as and when required. With these runs, load metrics such as request and response time and latency can be easily validated, which further helps in identifying any performance issues. Also, running these tests alongside the functional tests enables a Shift Left approach, speeding up the path of builds to production once all performance validations have passed.

If this has piqued your interest in Webomates’ CQ service and you want to know more, please schedule a demo or reach out to us at info@webomates.com.

If you like this blog series, please like or follow Webomates or Aseem.
