In Greek mythology, the chimera is a fire-breathing female monster with a lion’s head, a goat’s body and a serpent’s tail. The term chimera has also come to mean something that is hoped or wished for but is in fact illusory or impossible to achieve.

I often hear people claim that automation testing requires fewer resources in the long term, and that it therefore makes more sense to automate test cases in ALL situations.

At Webomates, one of the questions we constantly analyze is whether it is better to automate (or AI automate) a test case, or to use manual or crowdsourced testing. Not surprisingly, this is not a simple question to answer. In fact, we have found that more often than not, the promise that automation always saves resources is a chimera! In many situations you actually end up using more resources. Over Webomates’ three-year history, we have identified 6 key metrics that we use to decide whether we should automate or AI automate test cases.

I strongly encourage you to post your experiences in the feedback section below. I look forward to hearing from you and to broadening this discussion!

Here is the list of factors that we use to determine whether we should automate a test case or not:

Not all test cases are equally easy to automate.

In fact, we have identified many test cases that manual or crowdsourced testing can handle with ease but that require a disproportionately large effort to automate. This usually occurs in two situations:

1. When the UI has complex components that are difficult to automate, or

2. When dynamic data is returned, so the results are not easily validated.

So why swim upstream and automate these test cases? They are hard to create and even harder to maintain.

Automation scripting takes longer to get started and is more expensive to maintain than manual testing, for the reasons listed below:

Both automation and manual testing require test cases to be written first; automation then adds the extra work of scripting them.

Maintenance time is also higher for automation, as the scripts must be modified or completely rewritten depending on how much the test case has changed.

It is easier to write a test case in simple English than to write code!
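The cost argument above can be turned into a rough break-even estimate. The sketch below is a minimal illustration, not Webomates’ own model: it assumes that writing the test case costs the same either way (so it cancels out), that automation adds a one-time scripting cost plus a fixed maintenance cost per regression run, and that manual execution costs a fixed amount of tester time per run. The function name and all figures are hypothetical.

```python
import math


def break_even_runs(script_hours: float,
                    maintenance_hours_per_run: float,
                    manual_hours_per_run: float) -> float:
    """Number of regression runs after which automating a test case becomes
    cheaper than executing it manually.

    Hypothetical cost model (illustration only):
      automation cost after n runs = script_hours + n * maintenance_hours_per_run
      manual cost after n runs     = n * manual_hours_per_run
    Writing the test case itself is common to both approaches and cancels out.
    """
    saving_per_run = manual_hours_per_run - maintenance_hours_per_run
    if saving_per_run <= 0:
        # Script upkeep costs at least as much as running the test by hand,
        # so automation never pays for itself.
        return math.inf
    return script_hours / saving_per_run


if __name__ == "__main__":
    # Hypothetical figures: 4 h to script, 15 min upkeep vs 30 min manual per run.
    print(break_even_runs(4.0, 0.25, 0.5))  # 16.0
    print(break_even_runs(4.0, 0.5, 0.5))   # inf
```

With these made-up numbers the script only pays off after 16 regression runs, and if maintenance eats the entire per-run saving it never pays off at all.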

This metric captures how quickly you need the results of test case execution and how quickly the underlying test execution technique can deliver them.

This is where automation-based testing truly shines! At Webomates we have built an automation system that executes an effectively unlimited number of test cases in 15 minutes. To learn more, read this article: https://www.webomates.com/blog/software-testing/smoke-test/. Many traditional automation systems can complete hundreds or even thousands of test cases in a matter of hours.

Manual testing is resource-limited. Most trained QA testers can execute about 50 test cases per day. At that rate, running hundreds or thousands of test cases requires either a lot of QA testers for a short period of time, or test execution stretches into days or even weeks. The table below outlines the inherent scalability issue that manual testing faces:

| Number of test cases | 2 manual testers | 5 manual testers | 10 manual testers |
|---|---|---|---|
| 100 | 1 day | 2/5 day | 1/5 day |
| 500 | 5 days | 2 days | 1 day |
| 1000 | 10 days | 4 days | 2 days |
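Every cell in the table follows from one division: days = test cases / (testers × 50). A minimal sketch, assuming the 50-test-cases-per-day rate quoted above and that the work splits evenly across testers (the function and constant names are hypothetical):

```python
from fractions import Fraction

# Throughput quoted above: a trained QA tester runs about 50 test cases per day.
TEST_CASES_PER_TESTER_PER_DAY = 50


def manual_execution_days(test_cases: int, testers: int,
                          rate: int = TEST_CASES_PER_TESTER_PER_DAY) -> Fraction:
    """Days needed to run `test_cases` manually, assuming work splits evenly."""
    if testers <= 0:
        raise ValueError("testers must be positive")
    return Fraction(test_cases, testers * rate)


if __name__ == "__main__":
    # Rebuild the table: one row per suite size, columns for 2, 5 and 10 testers.
    for cases in (100, 500, 1000):
        print(cases, *(manual_execution_days(cases, t) for t in (2, 5, 10)))
    # 100 1 2/5 1/5
    # 500 5 2 1
    # 1000 10 4 2
```

Using `Fraction` keeps results such as 2/5 of a day exact, matching how the table expresses them.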

Crowdsourcing, on the other hand, does not face this limitation. It can bring hundreds of testers to a particular task for a limited period of time. But crowdsourcing has other challenges, such as the special skill of writing test cases that a crowdsourced worker, who may or may not have QA experience, can carry out correctly.


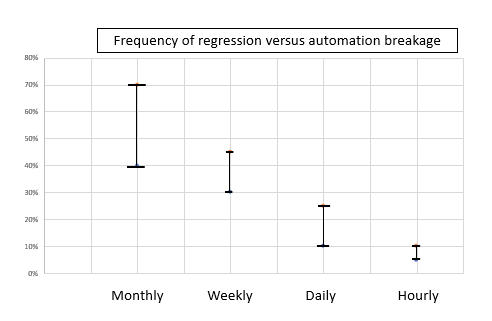

A software development team has a velocity: the number of features it can create and defects it can fix per unit of time. Thus, the longer you wait between releases, the more code changes between regressions, so more test cases are modified and more automation scripts break. Conversely, in a more agile environment, fewer test cases and test scripts change from one week to the next, or from one day to the next.

The table below outlines this inverse relationship, based on data we have compiled from active customers and assuming a fixed feature and defect-fixing velocity for the development team. Please note that these are averages within one standard deviation. My point? On rare occasions 100% of the automation fails, but that is an outlier: for example, when a major UI feature is introduced, a framework change is carried out, or the system’s login capability is broken and that impacts a large number of test cases.

The figures below show this information in two ways: graphically and in a table. The key point is that, most of the time, as the frequency of regression increases, the average automation breakage decreases:

| Regression frequency | Average automation test case breakage |
|---|---|
| Once a month | 40-70% |
| Once a week | 30-45% |
| Once a day | 10-25% |
| Once an hour | 5-10% |
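To see what these percentages mean for a real suite, the sketch below converts the table’s ranges into an expected range of broken scripts per regression run. The percentages come from the table; the frequency keys, the function name and the 1,000-script suite are hypothetical, and the averages say nothing about the outlier cases described above.

```python
# Average automation breakage ranges from the table above, in percent (low, high).
BREAKAGE_PERCENT_BY_FREQUENCY = {
    "monthly": (40, 70),
    "weekly": (30, 45),
    "daily": (10, 25),
    "hourly": (5, 10),
}


def broken_scripts_range(suite_size: int, frequency: str) -> tuple[int, int]:
    """Expected (low, high) number of broken automation scripts per regression
    run, using the average breakage ranges from the table."""
    if frequency not in BREAKAGE_PERCENT_BY_FREQUENCY:
        raise ValueError(f"unknown frequency: {frequency!r}")
    low, high = BREAKAGE_PERCENT_BY_FREQUENCY[frequency]
    return round(suite_size * low / 100), round(suite_size * high / 100)


if __name__ == "__main__":
    # Hypothetical suite of 1,000 automation scripts.
    for freq in BREAKAGE_PERCENT_BY_FREQUENCY:
        print(freq, broken_scripts_range(1000, freq))
```

For a 1,000-script suite, a monthly regression implies 400 to 700 scripts to repair before the next run, while an hourly cadence implies 50 to 100.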

Each development and QA organization has a stability index for the code that is going to be regression tested. If significant breakage is likely across many test cases in many builds, the value of the automation scripts drops significantly.

In essence, if the automation scripts are “always broken”, they are obviously not very valuable, as they become a resource and time bottleneck.

This metric is a proxy for how much development is being carried out on the product under test. The more practical version of this metric is

$$\frac{\text{Modified test cases} + \text{New test cases}}{\text{Total test cases}}$$
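The ratio is easy to track from one release cycle to the next. A minimal sketch, assuming you can count the modified, new and total test cases for a cycle (the function name and the sample counts are hypothetical):

```python
def change_ratio(modified: int, new: int, total: int) -> float:
    """(Modified test cases + New test cases) / Total test cases.

    Higher values point to a product early in its life cycle, where
    automation scripts are likely to need frequent rework.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    if min(modified, new) < 0:
        raise ValueError("counts must be non-negative")
    return (modified + new) / total


if __name__ == "__main__":
    # Hypothetical release cycle: 30 modified and 20 new out of 200 test cases.
    print(change_ratio(30, 20, 200))  # 0.25
```

Computing this per release, or per product area, gives a rough signal of where each area sits in its life cycle before you invest in scripting it.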

In essence, it is a metric that identifies which stage of the software life cycle a product is in.

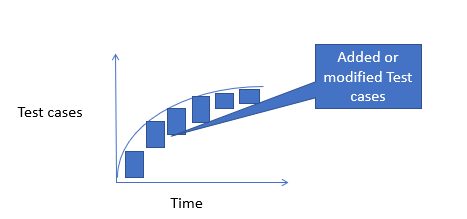

In the initial stages of a brand-new product, tremendous changes and additions occur across all areas under test. This results in near-constant updates to test cases and automation scripts.

As the product matures, certain areas of the product become more stable. For example, the development team focuses on a particular UI or feature area for a period of time and then switches to another area. The pace of this shift is largely defined by the team’s development velocity.

Finally, as a product approaches maturity, major development may halt entirely, either because the product has achieved the mission its creators set out to accomplish or because a next-generation product has been started to replace it. Software updates then focus on minor features or just bug fixes.

This metric significantly impacts the value of automation, especially early in the life cycle and for areas still under development in a product’s mid-life, because the rework needed for automation scripts can outweigh the value of creating them. In addition, automation scripts are brittle and time-consuming to fix, so if they are constantly broken, they don’t provide significant value in the regression. The likelihood is that once an automation script fails or breaks, either a manual test will be carried out or the test will be skipped entirely!

Determining whether a test case should be automated or AI automated is a non-trivial decision, and getting it wrong can result in considerable resource wastage. At Webomates we have identified 6 key factors that can help in determining whether to automate or not. Alternatively, you can use the Webomates CQ service, which makes that determination entirely Webomates’ problem! If you are interested in a demo, click here.

We look forward to hearing your opinion on the feedback form shown below.
