
Yes – AI is powerful.

But – AI can also hallucinate.

This isn’t a human vs. AI war. AI can generate test scripts and test data in seconds and identify trends and potential defects. But without human oversight, it can also produce false positives, suggest irrelevant tests, or miss business expectations. So yes – trust, but verify!

How AI Changes the Tester’s Role

As AI tools continue to mature, the tester’s role will increasingly resemble that of an analyst or a quality strategist, verifying AI outputs, guiding data relevance, and contextualizing false positives.

1. Tester to Analyst

AI can get you to your destination faster, but it doesn’t always know the best route. It can automatically generate test cases and test data, but the output needs a quality check to ensure relevance.

The most impactful testers will be those who can shift their role from “creation” to “verification and validation”, ensuring that the machine-generated output aligns with business goals, user behavior, and real-world edge cases.

2. Verifying AI Outputs

Human testers don’t just run tests; they understand the “why” behind user behavior. The human-in-the-loop approach ensures that:

- Test cases match the actual user experience.

- Critical business logic is checked for exceptions.

- Business-critical scenarios are always included.

- Redundant or duplicate test paths are removed.

- All regulatory or compliance nuances are followed.
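Two of the checks above, removing duplicate test paths and confirming that business-critical scenarios are present, lend themselves to a small pre-review script. The following is a minimal sketch, not a description of any particular tool: the `GeneratedCase` structure, the `deduplicate` and `missing_critical` helpers, and the scenario names are all hypothetical, and it assumes generated test cases arrive as a name plus an ordered list of step descriptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class GeneratedCase:
    """Hypothetical shape of an AI-generated test case."""
    name: str
    steps: tuple[str, ...]
    business_critical: bool = False


def normalize(step: str) -> str:
    # Collapse case and whitespace so trivially different wordings compare equal.
    return " ".join(step.lower().split())


def deduplicate(cases: list[GeneratedCase]) -> list[GeneratedCase]:
    """Keep the first case for each distinct sequence of normalized steps."""
    seen = set()
    unique = []
    for case in cases:
        key = tuple(normalize(s) for s in case.steps)
        if key not in seen:
            seen.add(key)
            unique.append(case)
    return unique


def missing_critical(cases: list[GeneratedCase], required: list[str]) -> list[str]:
    """Return required scenario names not covered by a business-critical case."""
    covered = {c.name for c in cases if c.business_critical}
    return sorted(set(required) - covered)


cases = [
    GeneratedCase("login", ("Open login page", "Enter credentials"), True),
    GeneratedCase("login copy", ("open  login page", "enter credentials")),
    GeneratedCase("checkout", ("Add item", "Pay")),
]
unique = deduplicate(cases)
gaps = missing_critical(unique, ["login", "refund"])
```

A script like this only narrows the list. Deciding whether two differently worded paths are really the same, or which scenarios count as business-critical, remains the tester’s call.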

3. Guiding Data Relevance

AI can only do what it is programmed to do, and its outputs are only as good as the data it’s trained on or prompted with. Inaccurate or incomplete data can also lead to a biased model.

Hence, testers need to review the generated test cases to ensure that each one is well structured and relevant, that its test steps are clear and accurate, and that the resulting automation scripts are consistent and maintainable. In doing so, they guide the system toward data that matters, not just data that’s available.

4. Contextualizing False Positives

False failures can stem from how a script interacts with the browser, from locator changes, or from dynamic behavior in the application. Some of this complexity can be handled at the script level, but no script can guarantee 100% correctness.

Testers interpret whether the failure has any real user impact. They use their domain knowledge, filter out false positives, and analyze test results with business logic. They make judgment calls that no algorithm can replicate. They ensure that the output isn’t just smart, but is also accurate.
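As a rough illustration of how a first-pass filter might support that judgment, the sketch below groups failure messages by likely cause before a tester looks at them. It is an assumption-laden example: the `triage` function and the patterns in `FLAKY_PATTERNS` are hypothetical, loosely modeled on the kind of wording browser automation frameworks tend to emit, and would need tuning against your own framework’s error messages.

```python
import re

# Hypothetical patterns; adjust them to the error text your framework produces.
FLAKY_PATTERNS = {
    "locator change": re.compile(r"no such element|unable to locate", re.I),
    "timing or dynamic content": re.compile(r"timeout|timed out|stale element", re.I),
}


def triage(error_message: str) -> str:
    """Suggest a likely cause for a failure; a human still makes the final call."""
    for cause, pattern in FLAKY_PATTERNS.items():
        if pattern.search(error_message):
            return f"needs review: possible false failure ({cause})"
    return "needs review: possible real defect"


print(triage("no such element: Unable to locate element #submit"))
print(triage("AssertionError: expected total 100 but got 90"))
```

Note that every branch ends in "needs review". The script only sorts the queue; whether a failure has real user impact is still decided by a tester with domain knowledge.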

Webomates: The Future is Hybrid, Not Human-Free

That’s what we do at Webomates – we harness the incredible speed and scale of generative AI to accelerate test creation, regression cycles, and defect triaging. But we also never forget this golden rule: “Trust, but verify.”

That’s why we blend the power of generative AI with the critical judgment of our experienced testers to ensure only the highest quality of test cases is delivered to the user.

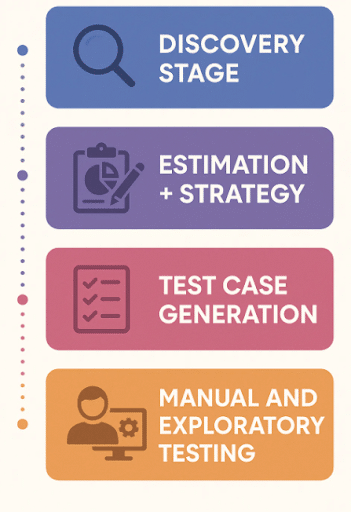

Our experienced testers review the outputs at the following stages:

- Discovery stage: Review and refine test coverage with domain knowledge and business-specific understanding.

- Estimation + Strategy: Validate that the testing strategy aligns with business expectations and regulatory requirements (especially in domains like Healthcare or Fintech). This also ensures that unscripted paths and edge-case scenarios, areas where automation alone may fall short, are factored into the plan.

- Test Case Generation: Review the generated test cases to ensure business logic is intact and all domain-specific test cases are covered.

- Manual and Exploratory Testing: Some areas, such as complex UI components, usability workflows, or nuanced user behavior, still require human intuition and therefore manual and exploratory testing.

The platform enables teams to accelerate their time-to-market by providing the framework, tooling, and accelerators to test their application across any industry type.

Click here to schedule a demo. You can also reach out to us at info@webomates.com.
